Python, Performance, and GPUs. A status update for using GPU… | by Matthew Rocklin | Towards Data Science

Executing a Python Script on GPU Using CUDA and Numba in Windows 10 | by Nickson Joram | Geek Culture | Medium

Beyond CUDA: GPU Accelerated Python on Cross-Vendor Graphics Cards with Kompute and the Vulkan SDK - YouTube

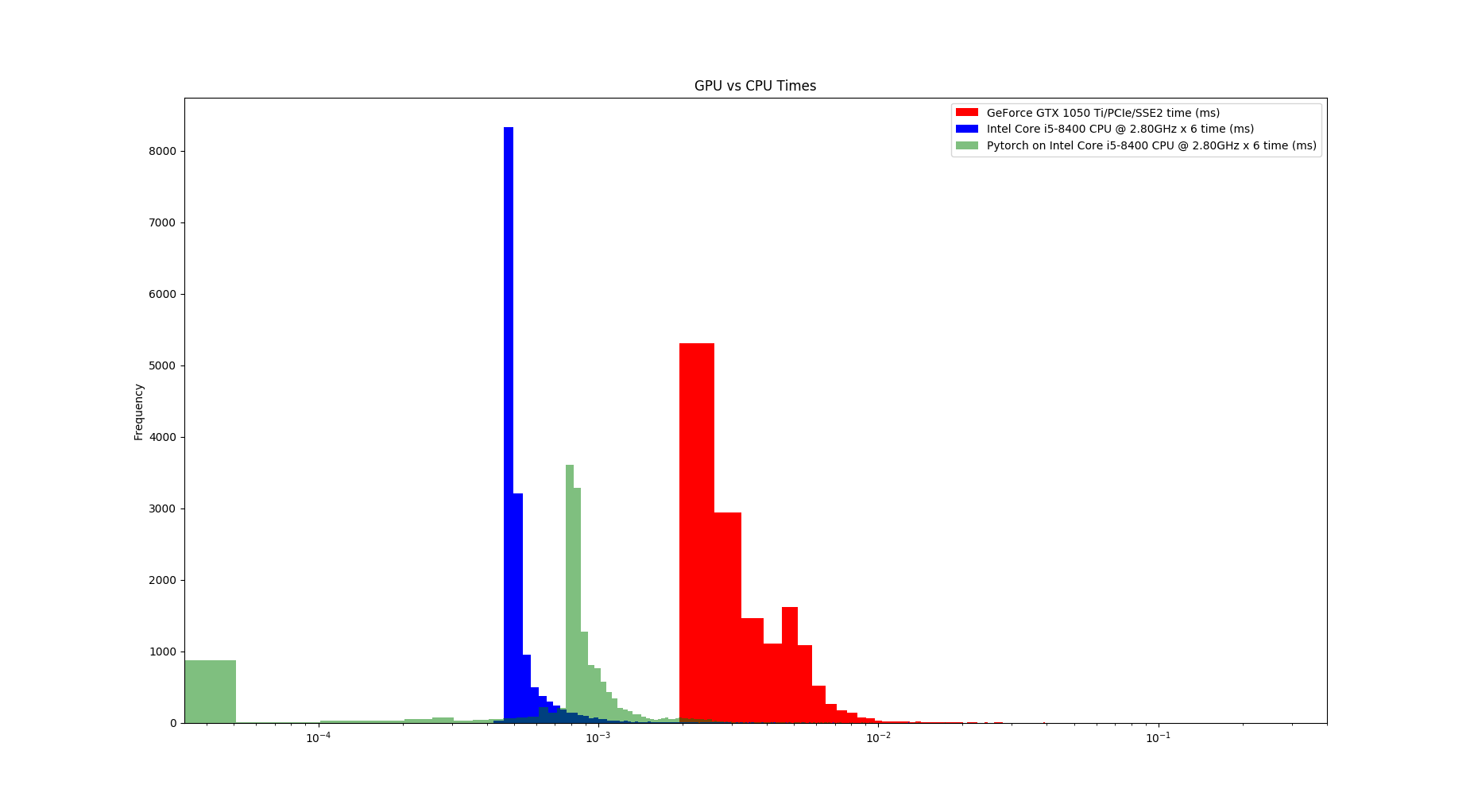

3.1. Comparison of CPU/GPU time required to achieve SS by Python and...

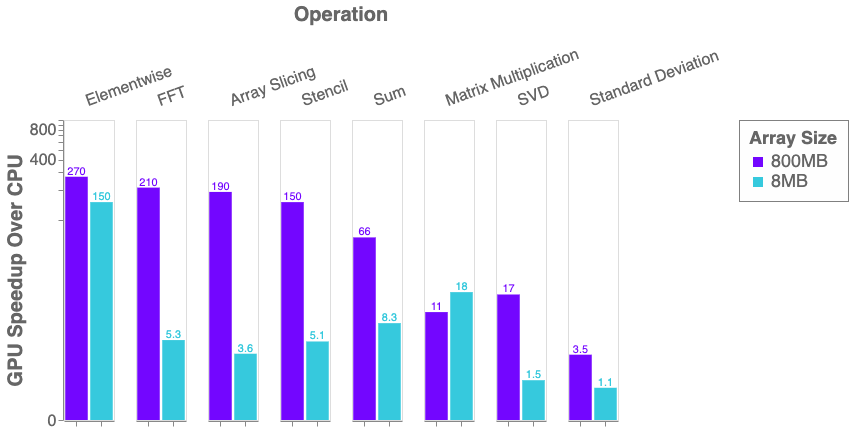

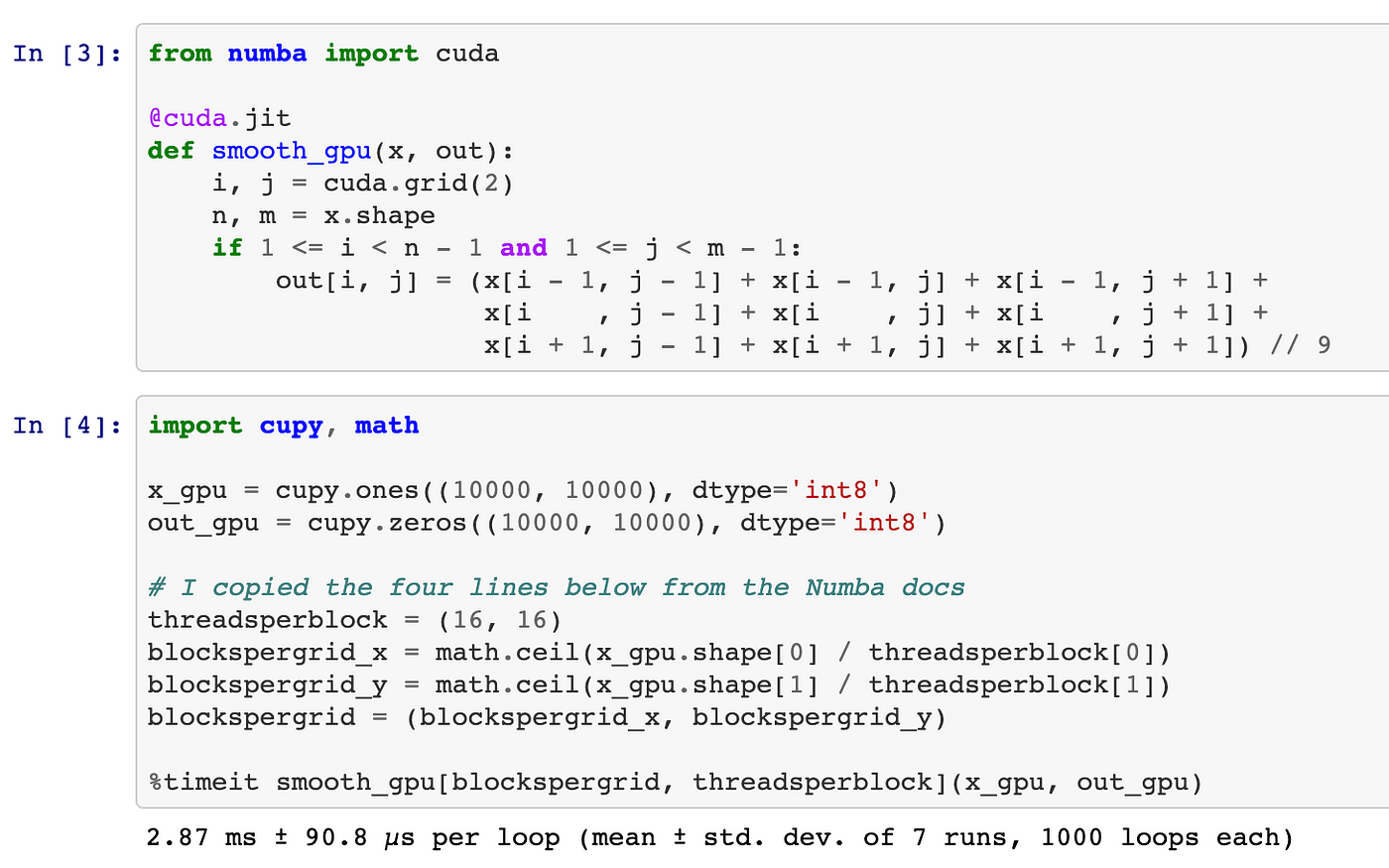

![Best Practices in Python: CPU to GPU [online, CPUGPU] Registration, Thu, 7 Mar 2024 at 9:00 AM | Eventbrite](https://img.evbuc.com/https%3A%2F%2Fcdn.evbuc.com%2Fimages%2F660157339%2F1510279949353%2F1%2Foriginal.20231218-143316?w=600&auto=format%2Ccompress&q=75&sharp=10&rect=0%2C0%2C2160%2C1080&s=028d4ae8a8065067bf1ed57e0850eaa3)